Implementing LLM Responses with Prediction Guard API

Overview

ManyChat is a popular chat automation tool that is widely used for customer service. This guide covers two ways to add LLM responses to a ManyChat flow using the Prediction Guard API. The first method is straightforward: it sends a single question without conversation context, and it can be set up quickly without writing code. The second method is more involved: it requires an AWS Lambda function (or a similar service) to process chat requests, call Prediction Guard, and respond through ManyChat’s Dynamic Block. While it is possible to manage context entirely within ManyChat, doing so requires significant manual effort, and context may be lost after a certain number of responses.

No Context Example

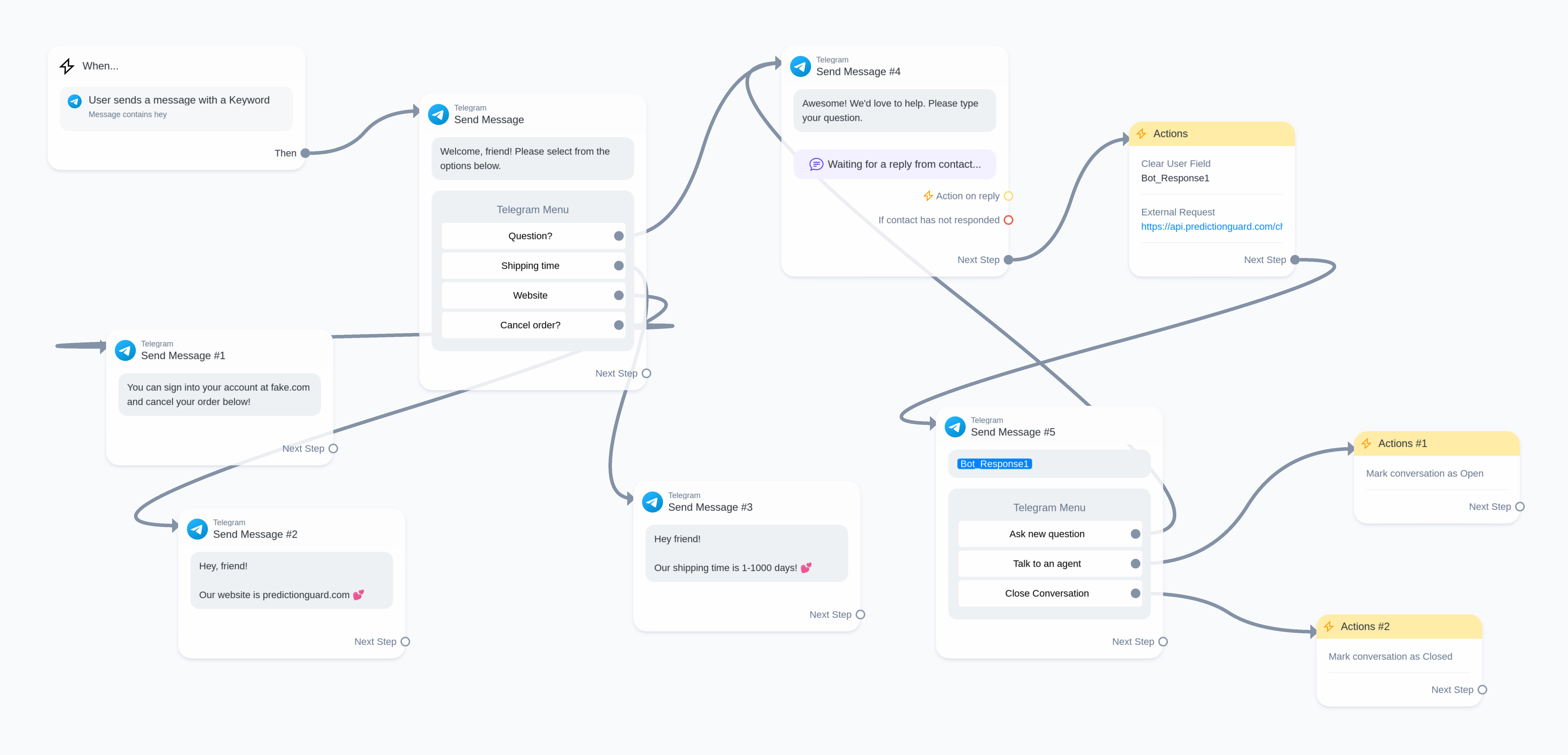

Our goal in this example is to let the customer click a button when they need a question answered. Their question is sent to the Prediction Guard Chat API endpoint, and the API's response is sent back to the customer as a Telegram message. They can then choose to ask a new question, speak to an agent, or close the conversation.

Prerequisites

- Prediction Guard API Key

- Sign up for ManyChat (Premium account required)

- Create two ManyChat User Custom fields (User_Question1, Bot_Response1)

- Create a Telegram bot


Steps

- Create a New Automation: Begin by setting up a new automation.

- Trigger Automation: Select an appropriate trigger to start the automation.

- Create a Telegram Menu: Use a Telegram Send Message block to build a menu. Include a “Question?” button to send user queries to Prediction Guard.

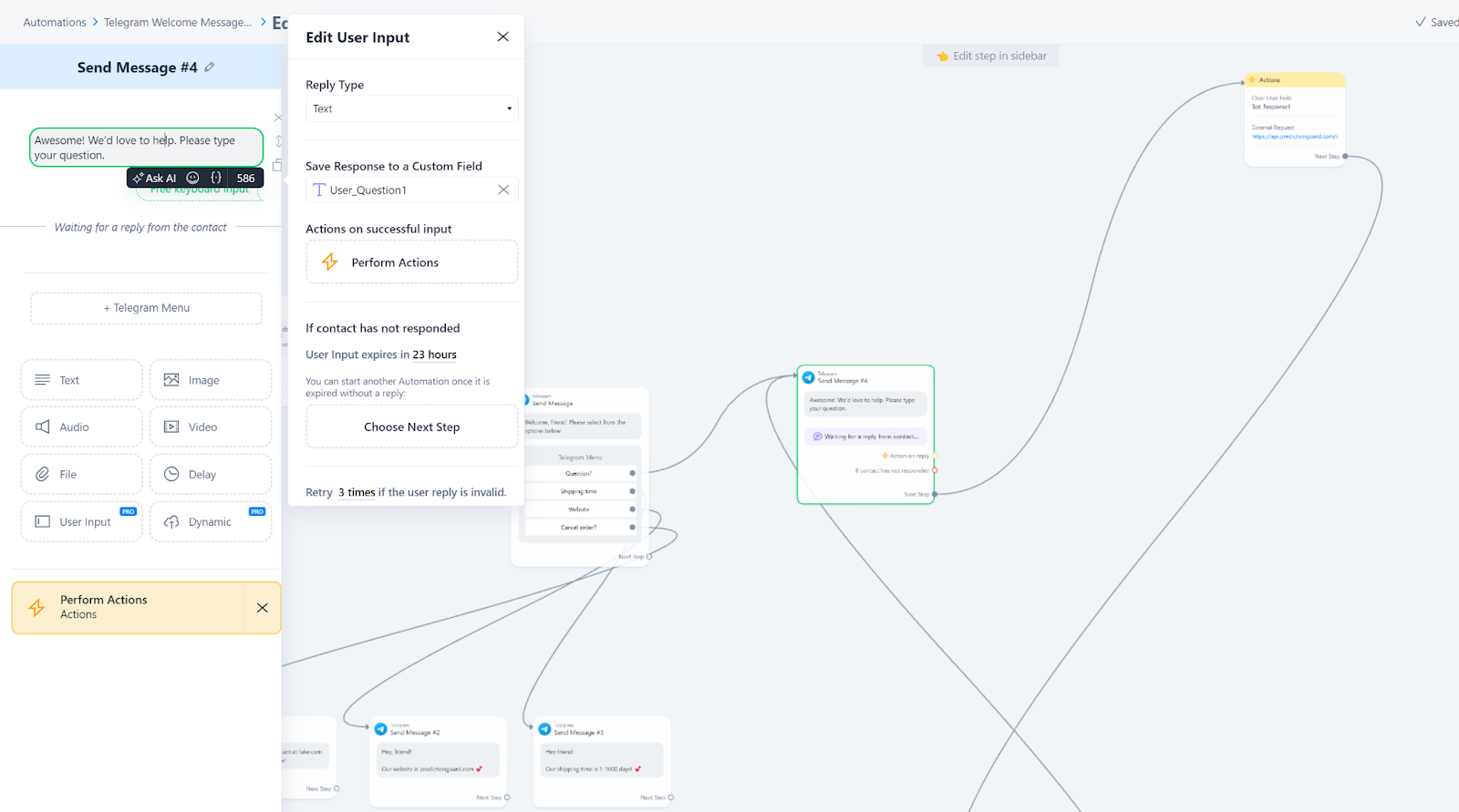

- User Input Block: Add a block for users to input their questions.

- Prompt and Save Response: Prompt users to enter their question and save their reply to a custom field (e.g., User_Question1).

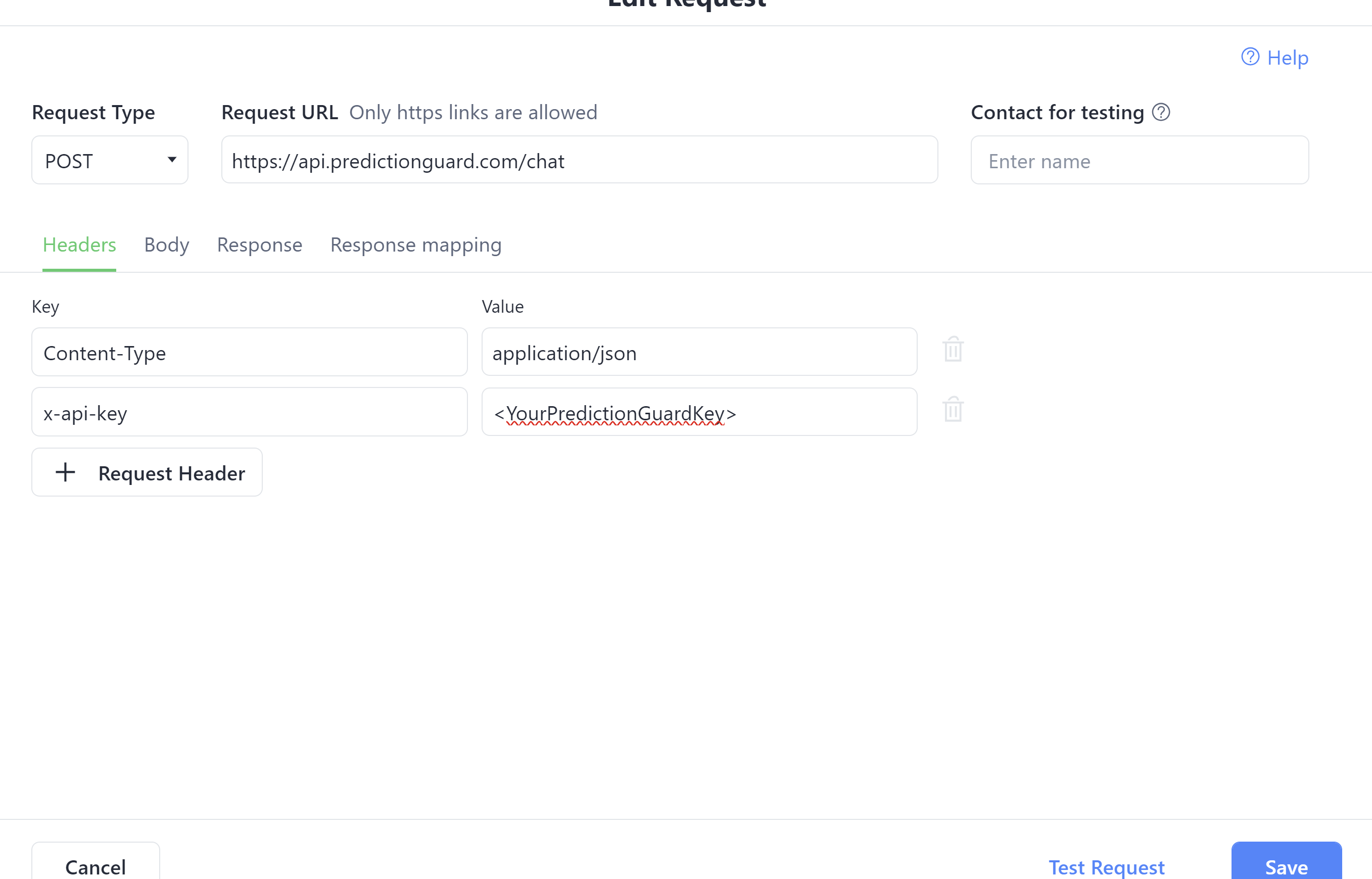

- External Request Action Block: Set up this block to make an HTTP POST request to https://api.predictionguard.com/chat with the required headers (including your API key) and a JSON body.

The body should be a JSON object containing a model name and a messages array with the user's question. Make sure to insert the User_Question1 field as the content of the user message.

- Clear the Bot_Response1 User Field: Add this action above the External Request Action so that the answer to a previous question does not carry over.

- Test the Response: Run a test request from the block to confirm that Prediction Guard returns an answer.

- Map Response to Custom Field: Map the API response to the Bot_Response1 custom field. The JSON path should be something like $.choices[0].message.content.

- Create Response Message Block: Set up a block in ManyChat to relay the response to the user (this should output the Bot_Response1 field).

- Provide Additional Options: Include options for users to ask a new question (route this back to the User Input block so they can type it), speak to an agent, or close the conversation.
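Before wiring up the External Request block, it can help to send the same request from a script. The sketch below assumes Node.js 18 or later (for the built-in `fetch`), an API key in a `PREDICTIONGUARD_API_KEY` environment variable sent as a Bearer token, and a placeholder model name; check the Prediction Guard API reference for the exact header and model names your account uses. The helpers `buildBody`, `extractAnswer`, and `ask` are hypothetical, and `extractAnswer` performs the same lookup as the $.choices[0].message.content JSON path.

```javascript
// Sketch of the request the External Request block sends, for testing outside ManyChat.
// Assumptions: Node.js 18+ (global fetch), a Bearer-token Authorization header,
// and a placeholder model name. In ManyChat, the question comes from User_Question1.
const PG_URL = "https://api.predictionguard.com/chat"; // endpoint used in this guide
const MODEL = "your-model-name"; // hypothetical placeholder: use a model your account can access

// Build the JSON body: a single user message, with no earlier conversation context.
function buildBody(question) {
  return { model: MODEL, messages: [{ role: "user", content: question }] };
}

// Same lookup as the JSON path $.choices[0].message.content mapped to Bot_Response1.
function extractAnswer(response) {
  return response?.choices?.[0]?.message?.content ?? "";
}

// Send one question and return the answer text.
async function ask(question) {
  const res = await fetch(PG_URL, {
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: `Bearer ${process.env.PREDICTIONGUARD_API_KEY}`,
    },
    body: JSON.stringify(buildBody(question)),
  });
  if (!res.ok) throw new Error(`Prediction Guard returned ${res.status}`);
  return extractAnswer(await res.json());
}
```

With the environment variable set, calling `ask("What are your opening hours?").then(console.log)` should print the text that the flow would store in Bot_Response1.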

After completing this example, your flow should look like this:

Include Conversation Context Example

Our goal in this example is again to let the customer click a button when they need a question answered, but this time the request includes the context of their previous questions. The request goes to your own Lambda function URL; the function processes the ManyChat input, makes an API request to Prediction Guard, and formats the response for ManyChat. ManyChat then sends the answer to the customer and creates or overwrites the text in the context fields you will create in ManyChat. The customer can then continue the conversation, ask a new question (which clears the context fields), speak to an agent, or close the conversation.

Prerequisites

- Your Prediction Guard API Key

- Sign up for ManyChat (Premium account required)

- Create three ManyChat User Custom fields (User_Text, Bot_Text, convo_placeholder)

- Create a Telegram bot

- An AWS Lambda function that is reachable from the internet (for example, through a Lambda function URL)

Steps

- Create a New Automation: Begin by setting up a new automation.

- Trigger Automation: Select an appropriate trigger to start the automation.

- Create a Telegram Menu: Use a Telegram Send Message block to build a menu. Include a “Question?” button to send user queries to Prediction Guard. This button should route to the next step.

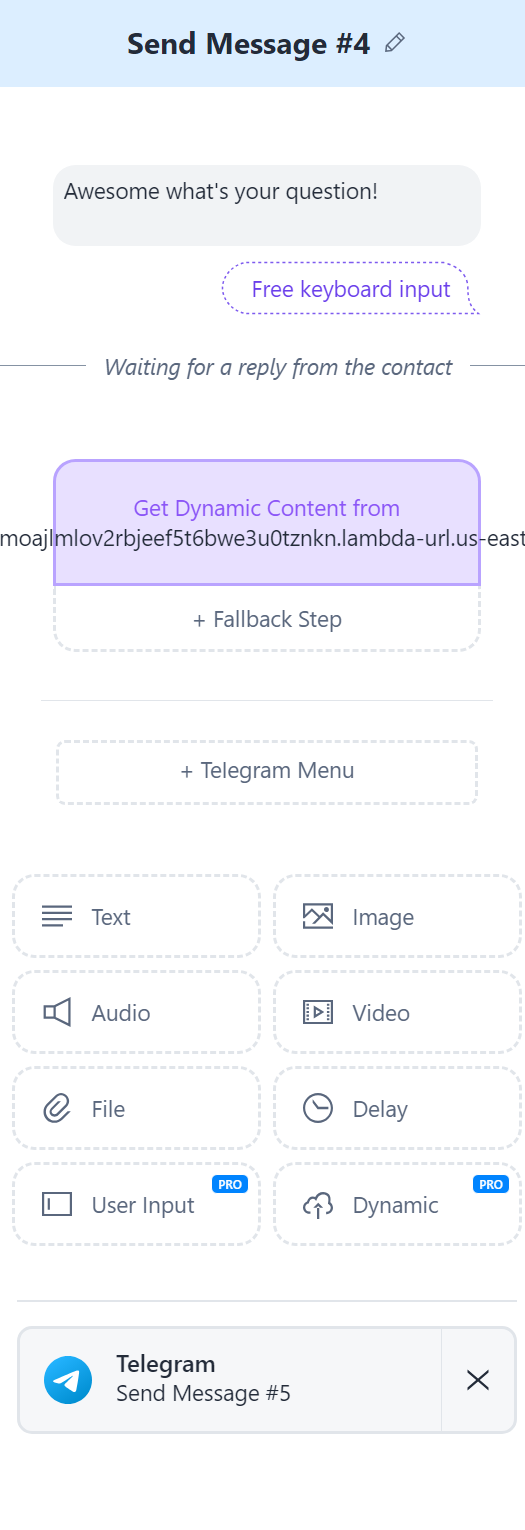

- Use Dynamic Content: Instead of an External Request Action block, add a “Get Dynamic Content” block. Also make sure to save the user’s question to the convo_placeholder user field:

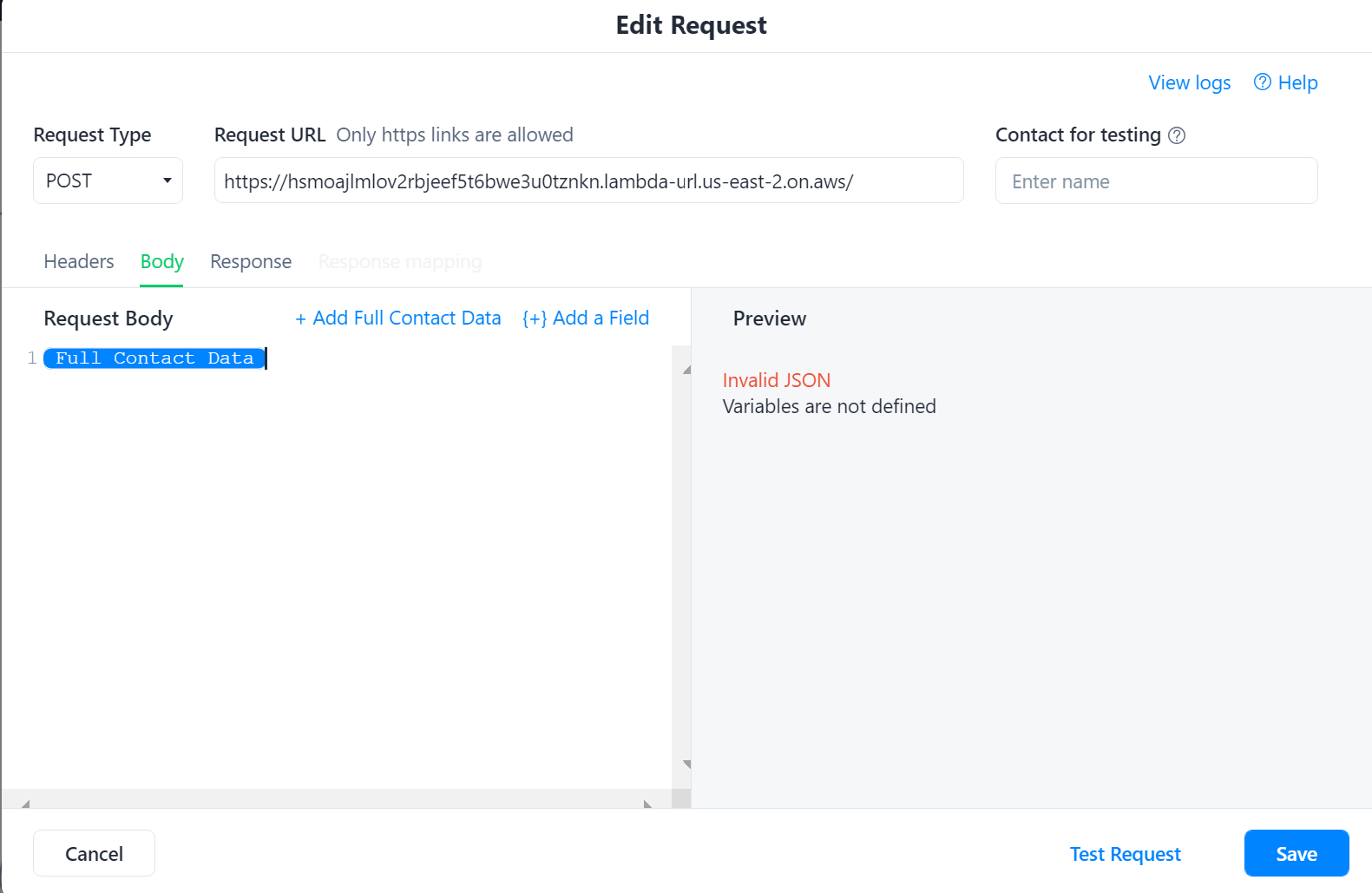

- Send Data to Lambda Function: Configure the block to send “Full Contact Data” to your Lambda function URL.

- Lambda Function Goals:

  - Parse the incoming request.

  - Extract the custom fields.

  - Extract the conversation history from the User_Text and Bot_Text custom fields you created. These are Text fields rather than array fields because of ManyChat limitations at the time of writing, but their contents should still be stored in an array-like format so that you can build the Prediction Guard chat request programmatically.

  - Append the customer's latest input (saved in convo_placeholder) to the user messages array (User_Text).

  - Prepare the messages in the format required by the Prediction Guard chat API.

  - Make the API request to Prediction Guard and process the response.

  - Append the Prediction Guard chat response to the bot messages array (Bot_Text).

  - Format the response for ManyChat as described in ManyChat's Dynamic Block documentation, using the format for your platform (Telegram in this guide). This response replies to the user and overwrites User_Text and Bot_Text with the updated conversation context.

JavaScript Example for Lambda Function
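The following is a minimal sketch of such a handler, not a reference implementation. It assumes Node.js 18 or later behind a Lambda function URL, an API key in a `PREDICTIONGUARD_API_KEY` environment variable sent as a Bearer token, a placeholder model name, and that ManyChat's Full Contact Data exposes custom fields as a `custom_fields` object keyed by field name. It also assumes User_Text and Bot_Text each hold a JSON-encoded array of strings, which is one way to keep array-like history in a Text field. The helper names `parseHistory`, `buildMessages`, and `toManyChatResponse` are hypothetical, and the response shape should be checked against ManyChat's Dynamic Block documentation.

```javascript
// Minimal sketch of a Lambda handler for the ManyChat "Get Dynamic Content" block.
// Assumptions (not confirmed by this guide): Node.js 18+ for the global fetch, a Bearer-token
// Authorization header, a placeholder model name, custom fields under contact.custom_fields,
// and User_Text / Bot_Text stored as JSON-encoded arrays of strings.
const PG_URL = "https://api.predictionguard.com/chat"; // endpoint used earlier in this guide
const MODEL = "your-model-name"; // hypothetical placeholder: replace with a model you can use

// Turn a Text custom field holding a JSON array back into an array (empty if unset or invalid).
function parseHistory(value) {
  if (typeof value !== "string" || value.trim() === "") return [];
  try {
    const parsed = JSON.parse(value);
    return Array.isArray(parsed) ? parsed.map(String) : [];
  } catch {
    return [];
  }
}

// Interleave stored turns as alternating user/assistant chat messages.
function buildMessages(userTexts, botTexts) {
  const messages = [];
  for (let i = 0; i < userTexts.length; i++) {
    messages.push({ role: "user", content: userTexts[i] });
    if (i < botTexts.length) {
      messages.push({ role: "assistant", content: botTexts[i] });
    }
  }
  return messages;
}

// Dynamic Block reply (assumed v2 shape): show the answer and overwrite both context fields.
function toManyChatResponse(answer, userTexts, botTexts) {
  return {
    version: "v2",
    content: {
      type: "telegram",
      messages: [{ type: "text", text: answer }],
      actions: [
        { action: "set_field_value", field_name: "User_Text", value: JSON.stringify(userTexts) },
        { action: "set_field_value", field_name: "Bot_Text", value: JSON.stringify(botTexts) },
      ],
      quick_replies: [],
    },
  };
}

async function handler(event) {
  // Parse the incoming request (function URLs may base64-encode the body).
  const raw = event.isBase64Encoded
    ? Buffer.from(event.body, "base64").toString("utf8")
    : event.body;
  const contact = JSON.parse(raw || "{}");

  // Extract the custom fields and the stored conversation history.
  const fields = contact.custom_fields || {};
  const userTexts = parseHistory(fields.User_Text);
  const botTexts = parseHistory(fields.Bot_Text);

  // Append the latest question, which the flow saved in convo_placeholder.
  userTexts.push(String(fields.convo_placeholder || ""));

  // Build the chat request from the full history and call Prediction Guard.
  const res = await fetch(PG_URL, {
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: `Bearer ${process.env.PREDICTIONGUARD_API_KEY}`,
    },
    body: JSON.stringify({ model: MODEL, messages: buildMessages(userTexts, botTexts) }),
  });
  // Fail the invocation on an API error so the stored context is not overwritten.
  if (!res.ok) throw new Error(`Prediction Guard returned ${res.status}`);
  const data = await res.json();
  const answer = data?.choices?.[0]?.message?.content ?? "";

  // Record the answer and return the ManyChat-formatted response.
  botTexts.push(answer);
  return {
    statusCode: 200,
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(toManyChatResponse(answer, userTexts, botTexts)),
  };
}

exports.handler = handler;
```

Keeping each side of the conversation as its own JSON array makes the two Text fields easy to rebuild into alternating user and assistant messages. Because the whole history is resent on every turn, the request grows with the conversation, so you may want to cap the number of turns you keep.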

Response Format for ManyChat (Telegram)

Your response to ManyChat must follow ManyChat's Dynamic Block response format for Telegram.
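A sketch of that response, with placeholder values, is shown below as a JavaScript object. The field names (`version`, `content`, `type`, `messages`, `actions`, `set_field_value`, `quick_replies`) reflect our reading of ManyChat's v2 Dynamic Block format and should be checked against ManyChat's current documentation; storing the context as JSON-encoded arrays in User_Text and Bot_Text is an assumption about how your Lambda function keeps history.

```javascript
// Illustrative Dynamic Block response for Telegram, using placeholder values.
// Assumed shape based on ManyChat's v2 Dynamic Block format: verify against ManyChat's docs.
const exampleResponse = {
  version: "v2",
  content: {
    type: "telegram", // the channel this reply targets
    messages: [
      // The Prediction Guard answer shown to the customer
      { type: "text", text: "<answer from Prediction Guard>" },
    ],
    actions: [
      // Overwrite the context fields with the full, updated history (JSON-encoded arrays)
      { action: "set_field_value", field_name: "User_Text", value: '["<customer question>"]' },
      { action: "set_field_value", field_name: "Bot_Text", value: '["<answer from Prediction Guard>"]' },
    ],
    quick_replies: [],
  },
};

// The Lambda function returns this object serialized as its HTTP response body.
console.log(JSON.stringify(exampleResponse, null, 2));
```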


Configure the rest of the flow

- The customer should be able to continue the conversation. This should just route back to the dynamic request without clearing the user fields.

- The customer should also be able to ask a new question. This option should clear the three user fields, which resets the conversation context.

- Finally, give the customer a way to reach a human agent and to close the ticket if they wish.

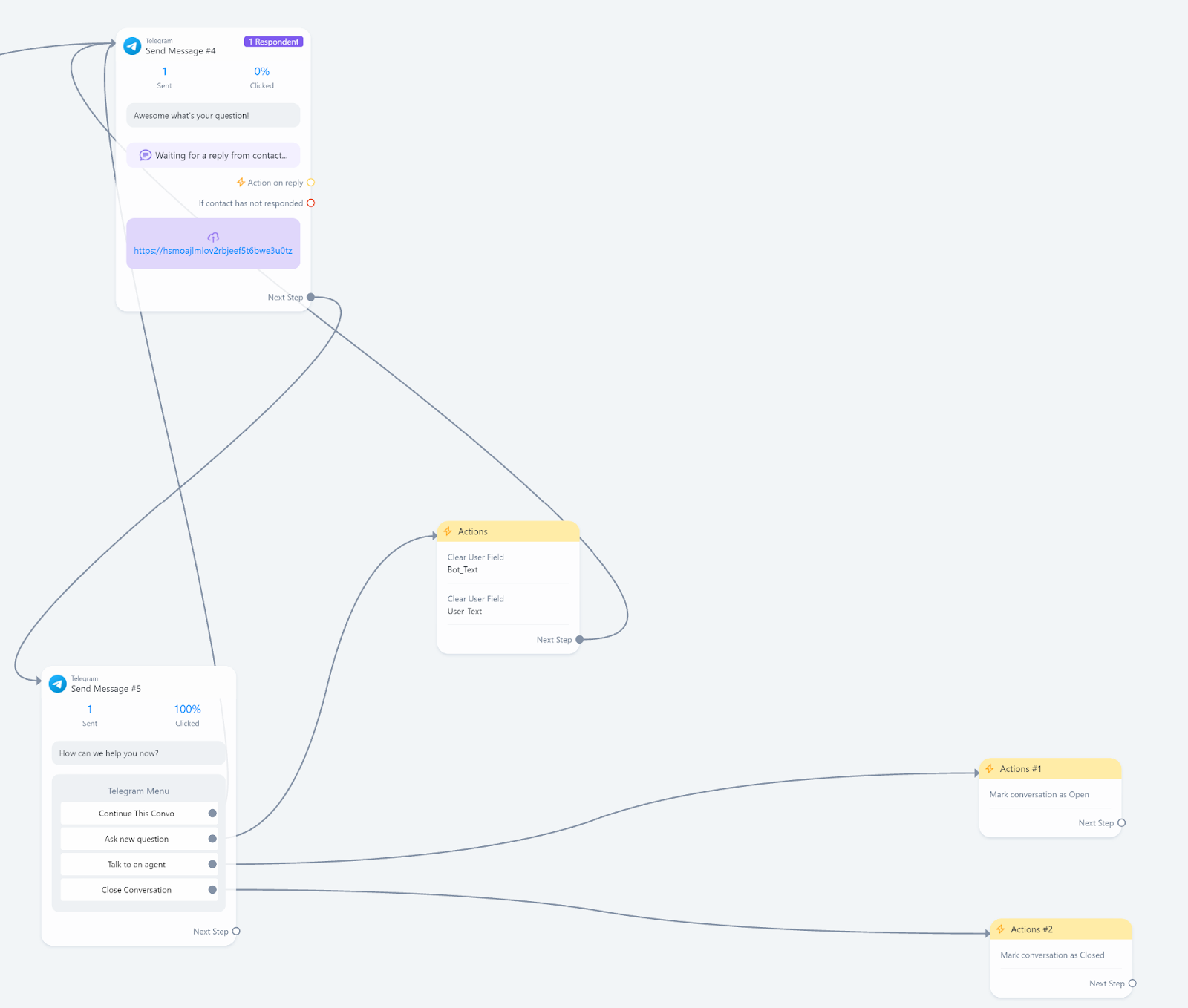

After completing these steps, your flow should look like this:

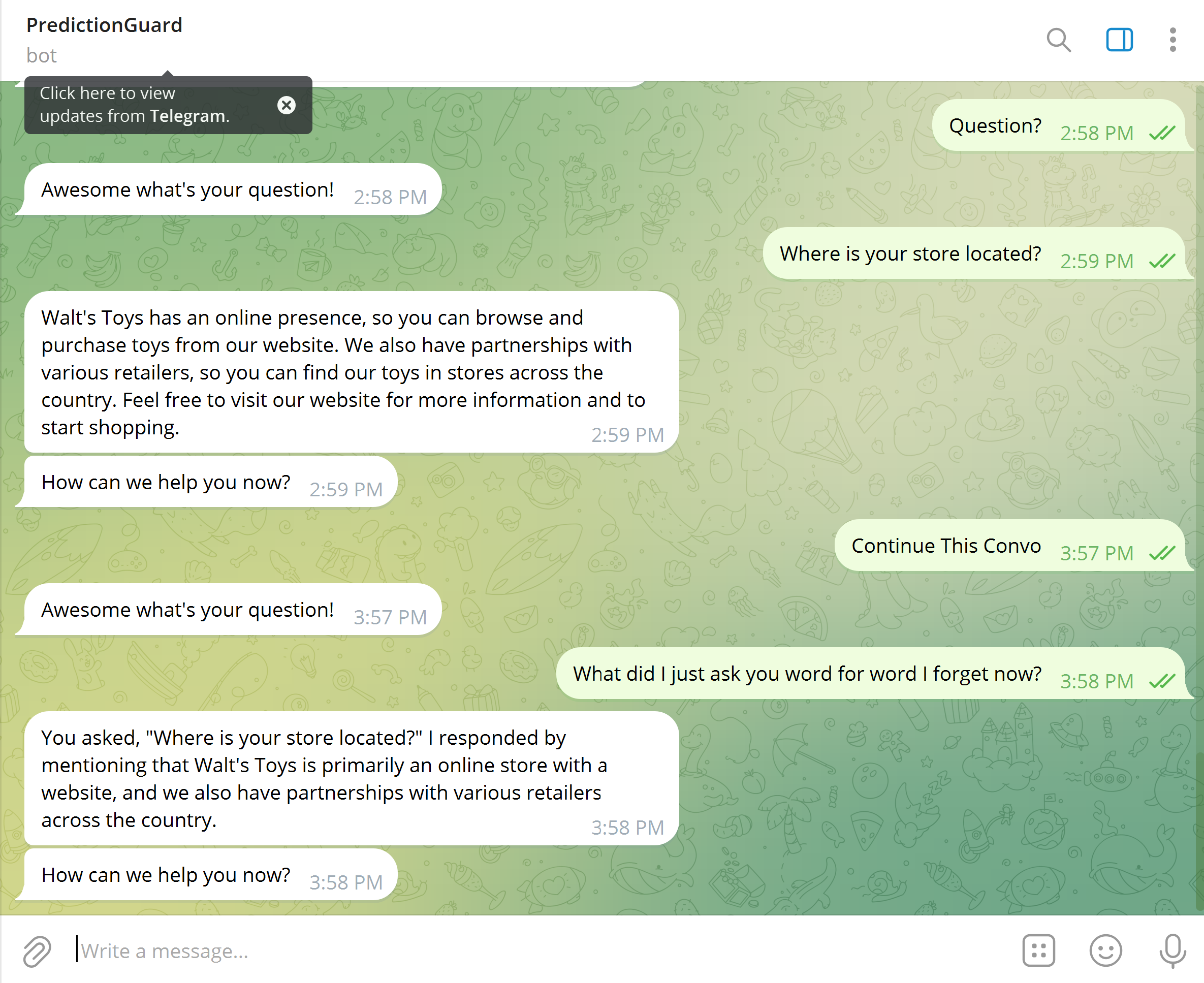

If you followed this example, your Telegram bot should be able to respond with the context of the entire conversation.

Happy chatting!